Definition

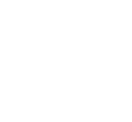

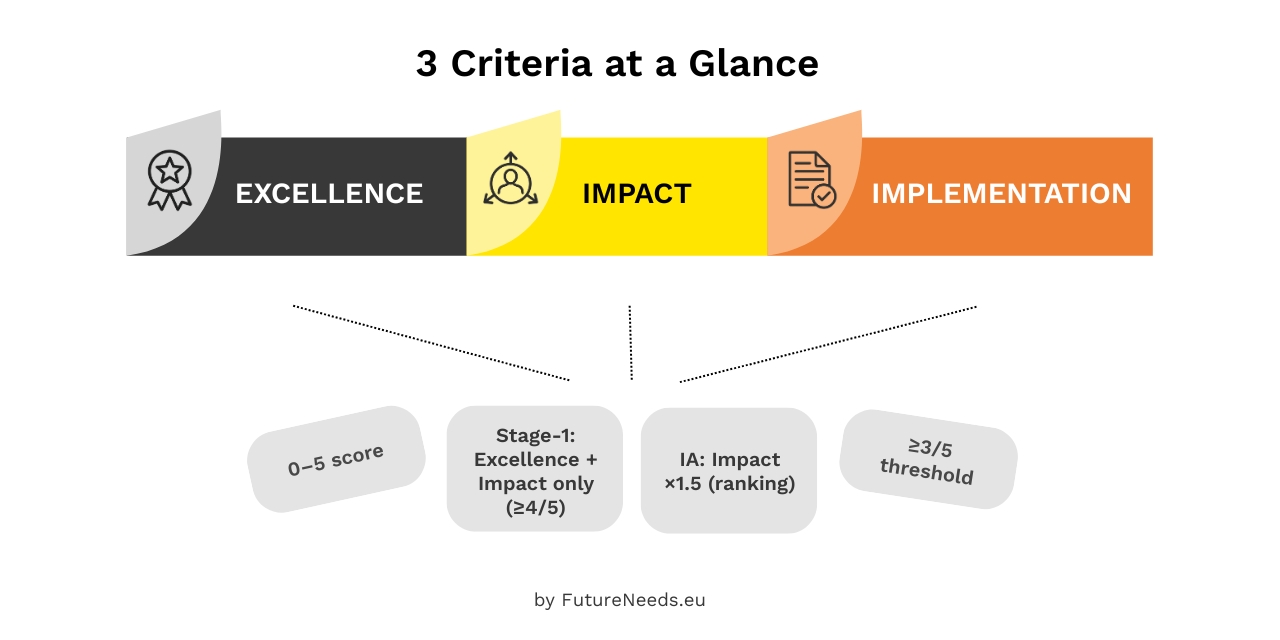

Horizon Europe proposals are read and scored by independent experts against three criteria: Excellence, Impact, and Quality and efficiency of the implementation. Each criterion is scored from 0 to 5 in half-point steps. The standard thresholds are 3/5 per criterion and 10/15 overall. For Innovation Actions, the Impact score is weighted ×1.5 when ranking proposals. In two-stage calls, stage 1 evaluates only Excellence and Impact, and each must reach 4/5 before you can be invited to stage 2.
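The scoring rules above are simple arithmetic, so they can be sketched in a few lines of Python. This is a minimal illustration, not an official tool. The constants mirror the standard values stated above (a call may set others), and the assumption that thresholds are checked on unweighted scores, with the ×1.5 Impact weight entering only the ranking score for Innovation Actions, is ours.

```python
from dataclasses import dataclass

# Standard values as described in the text; a specific call may set others.
THRESHOLD_PER_CRITERION = 3.0
THRESHOLD_OVERALL = 10.0
STAGE1_THRESHOLD = 4.0   # two-stage calls: Excellence and Impact only
IA_IMPACT_WEIGHT = 1.5   # Innovation Actions: Impact weight used for ranking


@dataclass
class Scores:
    excellence: float
    impact: float
    implementation: float


def valid_score(s: float) -> bool:
    """Scores run from 0 to 5 in half-point steps."""
    return 0 <= s <= 5 and float(s * 2).is_integer()


def passes_thresholds(sc: Scores) -> bool:
    """Every criterion >= 3/5 and the unweighted total >= 10/15."""
    values = (sc.excellence, sc.impact, sc.implementation)
    if not all(valid_score(v) for v in values):
        raise ValueError("scores must be between 0 and 5 in 0.5 steps")
    return min(values) >= THRESHOLD_PER_CRITERION and sum(values) >= THRESHOLD_OVERALL


def passes_stage1(excellence: float, impact: float) -> bool:
    """Stage 1 of a two-stage call: Excellence and Impact must each reach 4/5."""
    return excellence >= STAGE1_THRESHOLD and impact >= STAGE1_THRESHOLD


def ranking_score(sc: Scores, innovation_action: bool) -> float:
    """Ranking score; for IAs Impact is weighted x1.5 (assumed to apply only here)."""
    weight = IA_IMPACT_WEIGHT if innovation_action else 1.0
    return sc.excellence + weight * sc.impact + sc.implementation
```

For example, scores of 4.0 / 4.5 / 3.5 pass the standard thresholds (total 12.0), and the same proposal submitted as an IA would rank on 4.0 + 1.5 × 4.5 + 3.5 = 14.25.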

Key Takeaways

1. Write to the criteria, not to assumptions.

Excellence: set clear, ambitious objectives that go beyond the state of the art, and show a sound methodology. Address Open Science, the gender dimension, and social sciences and humanities (SSH) where relevant (briefly justify if they’re not).

Impact: draw a credible pathway from your results to the topic’s expected outcomes and the destination impacts. Quantify scale (how many, how far) and significance (why it matters). Name the barriers and how you will deal with them. Set concrete dissemination, exploitation and communication (D&E&C) actions and a fit-for-purpose IP strategy.

Implementation: present a logical work plan, realistic effort per WP, sensible risks and mitigations, and a consortium that actually matches the job.

2. Lump-sum proposals need the Excel detailed budget table. Experts will read it, document their checks, and may recommend precise reallocations or decreases. Personnel costs are compared to a dashboard for reasonableness.

3. Do No Significant Harm (DNSH) and AI robustness are assessed only if the topic requires them. If not requested, don’t add extra sections.

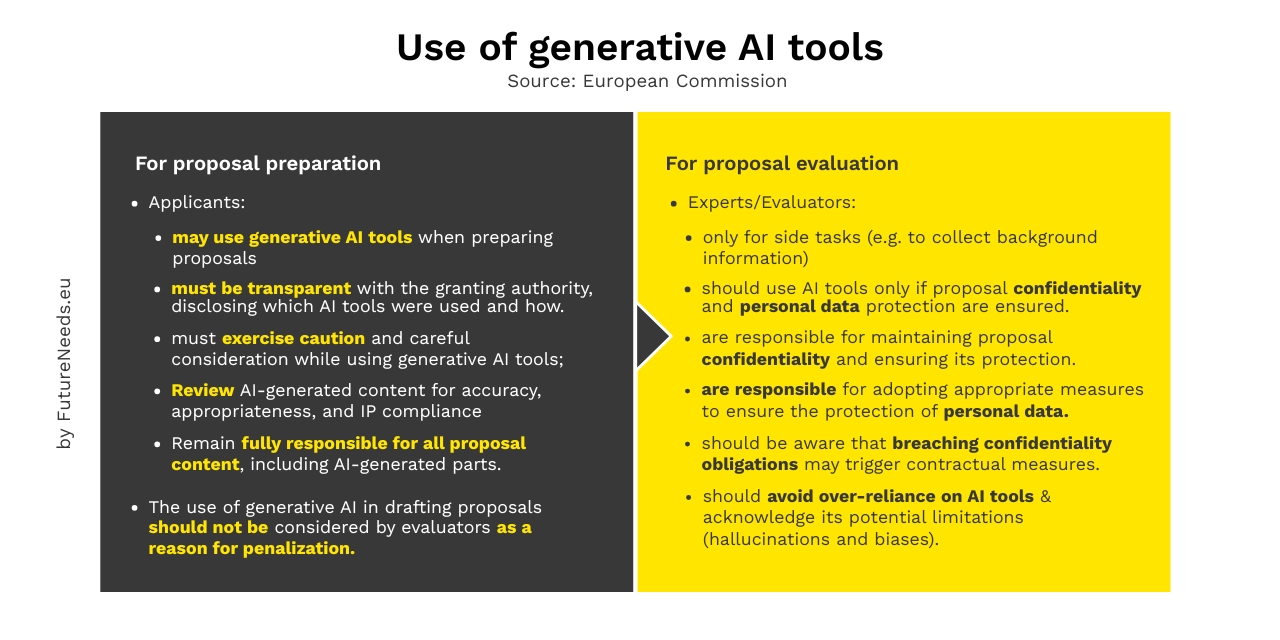

4. Generative AI may be used, provided you disclose the tools and how they were used, and validate the output for accuracy and IP. You remain responsible for the content.

Why it matters now

With the first deadlines just around the corner, the fastest way to gain points while polishing Part B is to mirror how experts read:

- align your text with the EC’s reading grid;

- make outcome-to-impact links explicit and quantified; and

- make sure your lump-sum table is internally consistent with Part B.

Small gaps here cost real points.

Steps / final-submission checklist

SUBMIT ALL MANDATORY PARTS/ANNEXES

Part A (web forms in the Funding & Tenders Portal)

- General information — proposal title, acronym, topic, type of action, short abstract.

- Participants & contacts — organisations (PICs), roles, contact details, declarations.

- Budget — estimated costs/shares by participant (for lump sum: shares per WP/beneficiary).

- Ethics & Security — complete the Ethics issues table (and brief self-assessment) and the Security questions if relevant.

- Other questions — any topic-specific items (e.g., clinical studies/trials block where applicable).

Part B (PDF upload — the technical description)

- Use the official template (B1: Excellence, B2: Impact, B3: Implementation).

- Respect the page limits stated in your template (commonly 45 pages for RIA/IA/CSA; some topics specify different limits, e.g., certain lump-sum templates).

Annexes (upload with Part B, only where applicable)

- Detailed budget table (Excel) for lump-sum topics (mandatory when the topic uses lump sum).

- Essential information for clinical studies/trials/investigations (official annex), if your work plan includes any clinical study/trial/investigation.

- Any topic-specific annexes explicitly requested on the topic page.

- (Do not attach extra materials like letters of support unless the topic explicitly asks for them.)

___

- Ensure all uploaded files are readable/printable and follow the required formatting and page-limit rules.

- Gender Equality Plan (GEP): checked internally for eligibility (not scored); do not place GEP content in Part B.

If your topic is in the 2025 blind-evaluation pilot (stage-1):

- Remove all identifiers from the abstract and Part B: no organisation names, acronyms, logos, personnel names, explicit location clues, or self-identifying phrases. Indirect identification can also make the proposal inadmissible.
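A plain-text scan can catch direct identifiers before upload. The sketch below assumes you have exported the abstract and Part B to plain text and compiled your own list of terms to look for (organisation names, acronyms, staff names, place names); the function name and example terms are hypothetical. It only finds direct mentions, so indirect clues still need a careful manual read.

```python
import re


def find_identifiers(text: str, identifiers: list[str]) -> list[tuple[str, int]]:
    """Return (term, line_number) for every case-insensitive whole-word hit.

    `identifiers` is a hypothetical, self-compiled list of names, acronyms
    and places that would reveal the applicants.
    """
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for term in identifiers:
            # Lookarounds instead of \b so terms ending in "." or "-" still match.
            pattern = rf"(?<!\w){re.escape(term)}(?!\w)"
            if re.search(pattern, line, flags=re.IGNORECASE):
                hits.append((term, lineno))
    return hits


sample = "We build on ACME University results.\nThe acme team in Athens.\nNo clues here."
print(find_identifiers(sample, ["ACME", "Athens"]))
# [('ACME', 1), ('ACME', 2), ('Athens', 2)]
```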

Excellence (Part B1):

- State clear, measurable objectives that are aligned with the topic and go beyond the state of the art (SOTA).

- Describe a sound methodology, including interdisciplinarity; address Open Science; integrate gender dimension and SSH where relevant (or justify non-relevance).

Indicative length: ≈ 14 pages for the methodology block alone, so Excellence typically runs to ≈ 14–16 pages in total (numbers are indicative, not mandatory).

Impact (Part B2):

- Map project contributions to expected outcomes and destination impacts; quantify scale (how widespread) and significance (value of benefits).

- Identify barriers (technical, regulatory, market, behavioural) and mitigation.

- Provide concrete D&E&C actions during and after the project, with target groups, and a credible IP strategy.

Indicative length: ≈ 9 pages overall, with D&E&C (including the summary) often taking about 5 of those.

Implementation (Part B3):

- Present a logical work plan with milestones/deliverables; align resources with tasks; specify risks and mitigations.

- Demonstrate consortium capacity (incl. OS/gender/SSH where appropriate), complementarity across the value chain, and access to needed infrastructure.

Indicative length: ~14 pages for the work plan & resources (incl. tables).

Lump-sum (if applicable):

- Upload the Excel detailed budget table (all sheets complete; PMs and splits match Part B tables).

- Ensure cost estimates are plausible, reasonable and necessary for the activities. Experts assess the table under Implementation and may recommend specific changes (in EUR or %). Personnel costs are checked against the dashboard, and evaluators must document their budget assessment in the ESR/CR.
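The consistency requirement (person-months and splits in the Excel table matching Part B) can be checked mechanically once both tables are in a structured form. This is a minimal sketch, assuming you have already transcribed both tables into nested dictionaries of work package → beneficiary → person-months; the function name, the WP and beneficiary labels and the 0.01 PM tolerance are illustrative choices, not part of the official template.

```python
def compare_person_months(
    part_b: dict[str, dict[str, float]],
    budget_table: dict[str, dict[str, float]],
    tol: float = 0.01,
) -> list[str]:
    """List every (WP, beneficiary) pair whose person-months differ.

    Both inputs are hypothetical transcriptions: WP -> beneficiary -> PMs.
    A pair missing from one side counts as 0 PM on that side.
    """
    issues = []
    for wp in sorted(set(part_b) | set(budget_table)):
        b_rows = part_b.get(wp, {})
        x_rows = budget_table.get(wp, {})
        for ben in sorted(set(b_rows) | set(x_rows)):
            pb = b_rows.get(ben, 0.0)
            xl = x_rows.get(ben, 0.0)
            if abs(pb - xl) > tol:
                issues.append(f"{wp}/{ben}: Part B {pb} PM vs budget table {xl} PM")
    return issues


part_b = {"WP1": {"BEN1": 12.0, "BEN2": 6.0}, "WP2": {"BEN1": 24.0}}
excel = {"WP1": {"BEN1": 12.0, "BEN2": 8.0}, "WP2": {"BEN1": 24.0}}
print(compare_person_months(part_b, excel))
# ['WP1/BEN2: Part B 6.0 PM vs budget table 8.0 PM']
```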

Important limits (apply to the whole Part B): Title, List of participants, and Sections 1–3 together must stay within the overall page limit (typically 45 pages; 50 for lump-sum topics). Section lengths above are indicative only; the IT system enforces the total.
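A quick total-page check can be sketched as follows. It assumes you know the page count of each part counted towards the limit (title, list of participants, Sections 1–3) and uses the typical 45/50-page limits mentioned above as defaults; the function names are hypothetical, and the limit stated in your topic's template always takes precedence.

```python
from typing import Optional

STANDARD_LIMIT = 45   # typical Part B limit
LUMP_SUM_LIMIT = 50   # typical limit for lump-sum topics


def page_limit(lump_sum: bool, topic_limit: Optional[int] = None) -> int:
    """Use the topic's own limit if given, otherwise the typical default."""
    if topic_limit is not None:
        return topic_limit
    return LUMP_SUM_LIMIT if lump_sum else STANDARD_LIMIT


def within_limit(section_pages: dict[str, int], lump_sum: bool,
                 topic_limit: Optional[int] = None) -> tuple[bool, int]:
    """Return (fits, total_pages); only the total is enforced, not section lengths."""
    total = sum(section_pages.values())
    return total <= page_limit(lump_sum, topic_limit), total


pages = {"title+participants": 1, "excellence": 16, "impact": 9, "implementation": 18}
print(within_limit(pages, lump_sum=False))  # (True, 44)
```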

Additional questions (answer briefly and precisely):

- Scope fit, exceptional funding, human embryonic stem cells/human embryos (hESC/hE), activities not eligible, exclusive civil use, Do No Significant Harm (DNSH), AI (as per topic).

Last-mile QA (48–72h before submission) to verify:

- criteria-to-text mapping is explicit

- numbers are consistent across Part A/B and the budget table

- blind text where required

- page limits respected

Example

A large-scale demo deploys an advanced forecasting system in three airports.

Expected outcome: adoption of innovative logistics solutions by the EU transport sector.

Project contribution: at least nine airports adopt the system post-project.

Destination impact: more seamless, smart, inclusive, sustainable mobility.

Quantified contribution: +15% maximum passenger capacity and +10% throughput, lowering expansion costs by ~28%.

(Use this pattern to map outcomes → impacts → scale & significance → barriers/mitigation → D&E&C → IP.)
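The pattern above can also be kept as a simple record per expected outcome, so gaps in the pathway stand out while drafting. This is a hypothetical structure of our own, not an EC template: the field names follow the sequence in the text, and placing the quantified figures under `significance` is one reasonable reading of the airport example.

```python
from dataclasses import dataclass, field


@dataclass
class ImpactPathway:
    """One outcome-to-impact chain, in the order suggested by the text."""
    expected_outcome: str
    project_contribution: str
    destination_impact: str
    scale: str = ""                                         # how widespread
    significance: str = ""                                  # value of benefits
    barriers: dict[str, str] = field(default_factory=dict)  # barrier -> mitigation
    dec_actions: list[str] = field(default_factory=list)    # D&E&C actions
    ip_strategy: str = ""

    def missing_links(self) -> list[str]:
        """Names of pathway steps that are still empty."""
        return [name for name, value in vars(self).items() if not value]


airports = ImpactPathway(
    expected_outcome="Adoption of innovative logistics solutions by the EU transport sector",
    project_contribution="At least nine airports adopt the system post-project",
    destination_impact="More seamless, smart, inclusive, sustainable mobility",
    scale="Demo in three airports; at least nine adopt post-project",
    significance="+15% max passenger capacity, +10% throughput, ~28% lower expansion costs",
)
print(airports.missing_links())  # ['barriers', 'dec_actions', 'ip_strategy']
```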

Limitations / common misreads

- Topic specificity: Only add AI robustness/DNSH if required by the topic; otherwise address environmental/AI aspects appropriately within the criteria.

- Scoring vs. sub-aspects: Evaluators score criteria, not each sub-bullet; write to the entire criterion.

- Lump-sum consistency: Person-months and task splits must match Part B; experts may propose EUR/% changes and must record checks.

- GEP & ethics placement: GEP is checked internally (not scored); policy/eligibility questions are handled via additional form items.

FAQ

How are Horizon Europe proposals scored and ranked?

Each criterion is scored 0–5; the default thresholds are 3/5 per criterion and 10/15 overall. For IA ranking, Impact is weighted ×1.5. At stage 1, only Excellence and Impact are scored, and each needs ≥4/5.

What exactly is “blind evaluation” at stage-1?

In selected two-stage calls, the abstract and Part B must contain no identifying information; direct/indirect identification leads to inadmissibility.

Do we need DNSH and an AI robustness subsection?

Only if the topic explicitly requires it (WP2025 simplification). Otherwise, do not add those sections.

How are lump-sum budgets evaluated?

Experts assess the detailed budget table under Implementation, must document their checks, and may recommend specific reallocations/decreases. Personnel costs are compared using the dashboard.

Can we use generative AI to draft?

Yes. Using gen-AI is not a reason to penalise a proposal. You remain responsible for disclosing the tools and how they were used, and for reviewing and validating the outputs for accuracy and IP.

Bottom line

You win points when:

- you map contributions to topic outcomes and destination impacts;

- you keep stage-1 texts blind where required; and

- you align the work plan, risks, resources, and lump-sum table with no inconsistencies.

Use the checklist above as your final pass before submission.

Sources (official, for download)

- Horizon Europe — Proposal Evaluation, Standard Briefing (Version 11.0, 15.07.2025). The single source of truth for this article. Download the EC briefing (PDF).

- Excel — Detailed budget table (lump sum), download the official template. (European Commission)

- Evaluation Form (HE RIA and IA) (European Commission)

- Standard evaluation process

Need support with your Horizon Europe proposal?

Whether you’re preparing your first Horizon Europe proposal or aiming to improve your success rate, our team of experienced proposal writers can help you understand the evaluation criteria and craft a competitive submission. Get in touch for expert guidance and hands-on support: info@futureneeds.eu.

Follow us on LinkedIn to stay informed about the latest Horizon Europe and Erasmus+ developments and subscribe to our newsletter for expert highlights from the EU research and innovation landscape, delivered straight to your inbox.

About the authors

Anna Palaiologk, the founder of Future Needs, is a Research & Innovation Consultant with 18 years of experience in proposal writing and project management. She has worked as a project coordinator and Work Package leader in 30+ EU projects and has authored 50+ successful proposals. Her research background is in economics, business development and policy-making. Email Anna at anna@futureneeds.eu.

Chariton Palaiologk, the Head of the EU Project Management Team, is currently leading the project management of 10+ EU-funded projects. He has a background in data analysis and resource optimisation, having worked at the Greek Foundation for Research and Technology. Email Chariton at chariton@futureneeds.eu.

Thanos Arvanitidis is a Research & Innovation Project Manager with a background in physics and biomedical engineering. He manages EU-funded research projects from initial conception through to implementation, working across key Horizon Europe clusters, including Cluster 1: Health and Cluster 4: Digital, Industry & Space. His expertise spans AI, healthcare, cybersecurity, and digital education. Email Thanos at thanos@futureneeds.eu.